
Production failures are among the most costly and time-consuming challenges a software project can face. A production failure is an incident in the live production environment where the software does not meet end-user requirements as expected. It is therefore critical to track production failures, since they indicate how effective your testing process is. The priority of a production defect specifies how quickly you need to fix it.

So what can you do to reduce production failures? Well, there are many things that can be done early on and during the software development cycle to mitigate production failures.

In our next incredible session of the Testμ conference, Vinayak Hegde, CTO-in-residence at Microsoft for Startups, and Mohit Juneja, VP of Strategic Partnerships and Business Development at LambdaTest, talk about various techniques for reducing the risk of software failure in production by leveraging technologies and processes like code profiling, observability, static analysis, code coverage, and more.

So, without further ado, let’s dive in!

Vinayak Hegde started the session by talking about the software life cycle and its stages: code, build & test, deploy, and production. He mainly emphasized the code and production stages.

Code

Vinayak listed five things to consider at the coding stage.

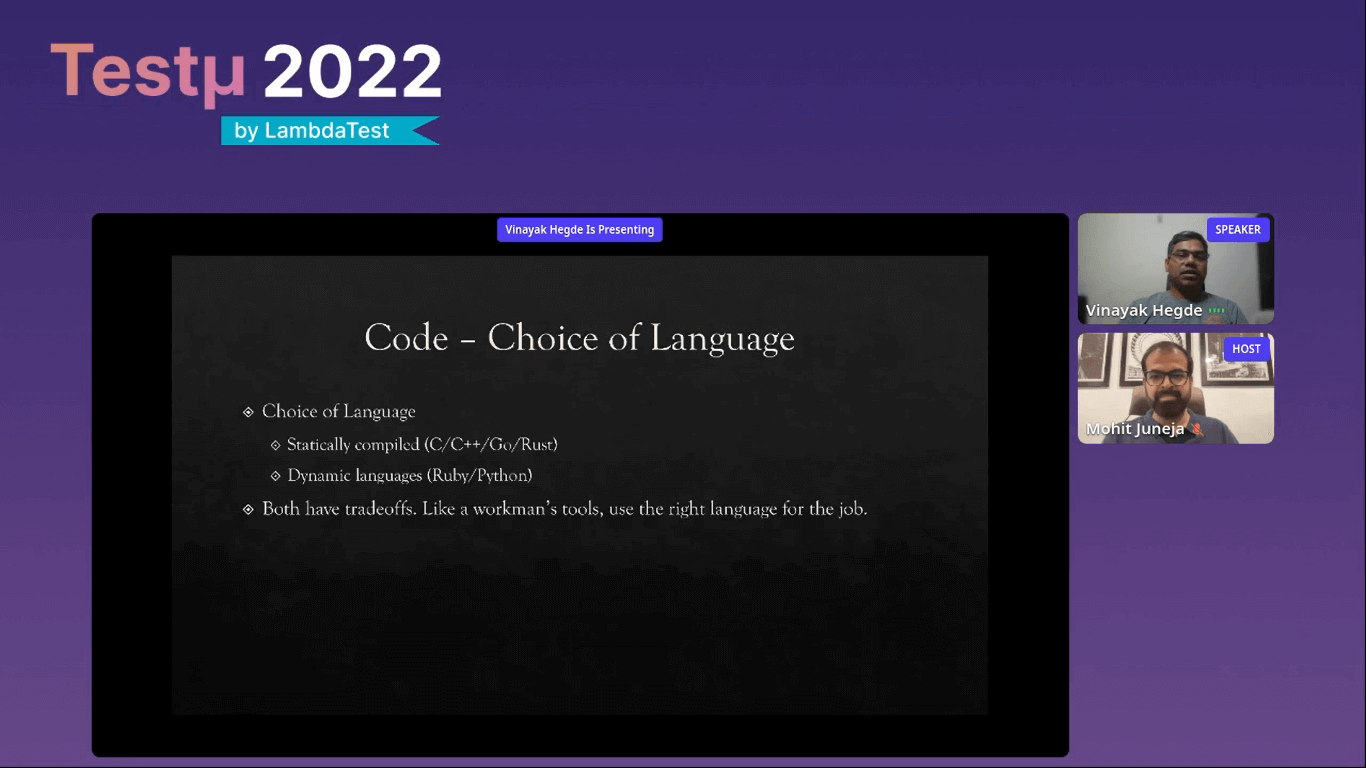

Choice of language: When working with a startup, you may come across the question: how do you choose a tech stack? When you are starting up, you want to build fast and don’t want complicated setups, so you want the language and tools to be malleable. That is why many startups start with Ruby or Python.

However, they can also choose statically typed, compiled languages like C, C++, C#, or Rust. Both dynamic and static languages have tradeoffs. Like a workman, you must use the right tool for the job.

Design by contract: This is the notion of a contract that extends down to the level of a procedure, method, or function. Every function has a contract consisting of the following information:

Acceptable or unacceptable input values or types and their meanings

Return value or types and their meanings

Error and exception condition values or types that can occur and their meanings.

Side effects

Pre-conditions

Post-conditions

Invariants
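To make the contract elements above concrete, here is a minimal Python sketch. The `Account` class and its `withdraw` method are hypothetical names invented for illustration and are not from the talk. The sketch assumes a simple style in which pre-conditions are checked with explicit exceptions, while post-conditions and the invariant are checked with `assert`.

```python
class Account:
    """Hypothetical bank account. Invariant: balance is never negative."""

    def __init__(self, balance: int = 0) -> None:
        if balance < 0:
            raise ValueError("opening balance must be non-negative")
        self.balance = balance
        self._check_invariant()

    def _check_invariant(self) -> None:
        assert self.balance >= 0, "invariant violated: negative balance"

    def withdraw(self, amount: int) -> int:
        """Withdraw `amount` and return the new balance.

        Input: a positive int no larger than the current balance.
        Returns: the balance after the withdrawal.
        Errors: TypeError for a non-int amount, ValueError if out of range.
        Side effect: reduces self.balance by `amount`.
        """
        # Pre-conditions: reject inputs the contract does not accept.
        if isinstance(amount, bool) or not isinstance(amount, int):
            raise TypeError("amount must be an int")
        if amount <= 0 or amount > self.balance:
            raise ValueError("amount must be between 1 and the current balance")

        old_balance = self.balance
        self.balance -= amount

        # Post-condition: the balance dropped by exactly `amount`.
        assert self.balance == old_balance - amount
        # Invariant: must hold again before control returns to the caller.
        self._check_invariant()
        return self.balance


account = Account(100)
print(account.withdraw(30))  # prints 70
```

Writing the contract down this way means a caller who breaks it fails immediately at the function boundary, not later in production with corrupted state.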

Programming safety: If you look at the overall landscape, you will notice that many bugs are associated with older software written in C or C++, much of it related to memory safety.

When Microsoft analyzed its vulnerability database, it found that almost 70% of the vulnerabilities were memory safety issues. According to Vinayak, many of the rest can be caught by checking for thread safety.

Rust is regarded as memory safe and goes out of its way to enforce this through concepts like ownership, in which every value has a single owner responsible for its memory. There is also the borrow checker, which verifies at compile time that references are valid whenever you use a variable or any chunk of memory.

Linters and static analysis: A linter is a tool that analyzes source code to flag programming errors, bugs, stylistic errors, and suspicious constructs. Linters perform checks like indexing beyond array bounds, dereferencing null pointers, potentially dangerous data type combinations, and non-portable constructs. Examples of linters are Pylint and JSLint, and they can be easily added to the developer workflow.
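As a rough illustration of how a linter flags suspicious constructs, the sketch below uses Python’s standard `ast` module to detect two well-known ones: mutable default arguments and bare `except:` clauses. The `lint` function and the `SOURCE` snippet are hypothetical and written only for this example. Real linters such as Pylint implement far more checks and do not necessarily work this way internally.

```python
import ast

# Hypothetical snippet to lint; each function contains one suspicious construct.
SOURCE = '''
def add_item(item, bucket=[]):
    bucket.append(item)
    return bucket

def load(path):
    try:
        return open(path).read()
    except:
        return None
'''


def lint(source: str) -> list[str]:
    """Return warnings, ordered by line number, for two suspicious constructs."""
    findings = []  # (line number, message) pairs
    for node in ast.walk(ast.parse(source)):
        # A mutable default argument is created once and shared between calls.
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            defaults = list(node.args.defaults)
            defaults += [d for d in node.args.kw_defaults if d is not None]
            for default in defaults:
                if isinstance(default, (ast.List, ast.Dict, ast.Set)):
                    findings.append(
                        (default.lineno, f"mutable default argument in '{node.name}'")
                    )
        # A bare 'except:' also swallows KeyboardInterrupt and SystemExit.
        if isinstance(node, ast.ExceptHandler) and node.type is None:
            findings.append((node.lineno, "bare 'except:'"))
    return [f"line {lineno}: {message}" for lineno, message in sorted(findings)]


for warning in lint(SOURCE):
    print(warning)
```

The code under inspection is parsed but never executed, which is why checks like these can run cheaply on every commit.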

Then comes static analysis, which examines source code without running it to flag programming errors, including those related to security issues. It performs checks like pattern-based simulation, quality and complexity metrics, and best practice recommendations for developers. Examples of static analysis tools include Helix QAC and Coverity.

Code profilers: These measure an application as it runs to show where it can be optimized, which results in better application performance. A profiler analyzes the memory, CPU, and network usage of each component of an application. Code profilers are of two types: sampling profilers and instrumentation profilers.

Profilers report on things like coverage, memory allocation, and resource usage. Some examples of profilers are gprof and OProfile.
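For a feel of what a profiler reports, here is a small sketch using `cProfile` and `pstats` from the Python standard library. `cProfile` is a deterministic profiler, so it falls on the instrumentation side of the two types mentioned above. The functions `slow_sum` and `profile_call` are hypothetical names made up for this example, and the workload is deliberately naive.

```python
import cProfile
import io
import pstats


def slow_sum(n: int) -> int:
    """Deliberately naive workload: sum of squares in a Python loop."""
    total = 0
    for i in range(n):
        total += i * i
    return total


def profile_call(func, *args) -> str:
    """Run func(*args) under cProfile and return a text report."""
    profiler = cProfile.Profile()
    profiler.enable()
    func(*args)
    profiler.disable()

    buffer = io.StringIO()
    stats = pstats.Stats(profiler, stream=buffer)
    # Show the five entries with the highest cumulative time.
    stats.sort_stats("cumulative").print_stats(5)
    return buffer.getvalue()


report = profile_call(slow_sum, 100_000)
print(report)
```

The report lists call counts and time per function, which is usually enough to spot the hot path before reaching for heavier tooling.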

Vinayak then briefly touched upon the build and test stage, explaining concepts like load testing, trace testing, and fuzz testing. He then talked about the deployment stage and two of its techniques: canary testing and blue/green deployment.

![Reducing Production Failures by Improving Quality: Vinayak Hegde [Testμ 2022]](https://cdn.hashnode.com/res/hashnode/image/upload/v1663930130765/dSkG7Cndc.png?w=1600&h=840&fit=crop&crop=entropy&auto=compress,format&format=webp)